The specs for Gold haven’t been nailed down yet, but two routers were mentioned above. It’s unclear whether he is referring to a high-availability setup.

GEP Gold Certified Farming Specs (CLOSED)

Hi, I love that you have not chosen a datacenter in or around A’dam. It may be a small country, but there is so much more to the Netherlands!

Regarding the specs and requirements, I’ll reread the thread, sum it all up, and then close it (threads should not stay open forever; a new one can always be created to build on the outcome of others). Then we have to find a way to “validate” that a farm meets the gold spec. Give me 24 hours to complete this.

Yes, they have multiple DCs in the east of the Netherlands, but this one definitely has all the facilities. I must rent a full rack because of the power (amperage) requirement, but that’s not a problem because it fits 52U. And yes, there is more than cheese and tulips; soon ThreeFold capacity will be added to that list.

Maybe I can get some confirmation from them. I do have documents regarding the ISO certification, and I suggest that a contract with the DC is the best way to go.

Recap of the original GEP definition, with changes/additions marked in italic that summarize the open questions and remarks. Please comment or suggest changes if you feel I missed your contribution. I will try to close this over the weekend.

For clarity: Gold certified farming specs are defined against 3nodes and where these 3nodes “live”. It is therefore advisable to create one (or more) farms per location that contain 3nodes of a single type (DIY, certified or gold certified). Mixing types of 3nodes in a single farm is discouraged.

Gold Farming Qualification requirements

I summarized and ordered the requirements per category. Changes and additions are in italic.


FARM HARDWARE:

- Hardware purchased from a recognized vendor (approved by the DAO). Initially HPE and ThreeFold hardware, but this will be expanded to other vendors such as Dell, IBM, SuperMicro, etc. There is no process yet defining how to do this; TBD in the coming weeks.

- The 3Nodes need to be certified (this can be done by TFTech or other actors on the blockchain). There is no process yet defining how to do this; TBD in the coming weeks.

- Two power supplies, or a PDU with two power feeds that automatically fail over. Two completely independent power paths to each 3node, meaning each 3node must have a redundant power supply connected to independent power feeds.

- Uptime achieved: 99.8%. This requirement needs more detail. Uptime is defined per month per server, but how many months can you miss before you lose the gold certified status? Can you lose the certified status per node, or is the whole farm affected?


FARM NETWORK: At least two Internet Service Provider connections. These two upstream network connections should be made available to every 3node that is part of the Gold certification. This translates to:

- Two routers per rack. In Tier 3 and higher datacenters, the network infrastructure is redundant up to the rack. The two in-rack routers form a failover setup that uses the datacenter’s redundant network infrastructure, with each router having separate cables to the datacenter network. In some datacenters, farmers may be able to choose between two connectivity suppliers in the meet-me room.

- Network connection of at least 1 Gbit/s per TBD number of CU/SU. This will require further definition. Amounts are being discussed in other threads, and once they are settled we will add them here.

- Minimum of 5 IPv4 addresses per server. These are addresses available to connect the 3nodes to the internet with a public IP address (one per 3node) and/or a pool of IP addresses available for VMs on the 3nodes to have a public network connection.

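One way to meet the 5-address minimum is a single /29 allocation. The Python sketch below assumes the farm receives a routed /29 and that the upstream gateway takes one of its usable addresses; both are assumptions, since DCs allocate and route addresses differently. The prefix 203.0.113.0/29 is a documentation range used only as an example.

```python
import ipaddress

# Assumption: the DC/ISP hands the farm a /29 and the gateway sits on one usable address.
# 203.0.113.0/29 is a documentation prefix (RFC 5737), used here only as an example.
subnet = ipaddress.ip_network("203.0.113.0/29")

# hosts() skips the network and broadcast addresses for prefixes shorter than /31.
hosts = list(subnet.hosts())
gateway = hosts[0]       # hypothetical: gateway on the first usable address
assignable = hosts[1:]   # left over for 3node public IPs and/or a VM public IP pool

print(f"{subnet}: {subnet.num_addresses} total, {len(hosts)} usable, "
      f"{len(assignable)} assignable after the gateway")
for ip in assignable:
    print(ip)
```

A /29 holds 8 addresses; removing the network, broadcast and gateway addresses leaves exactly 5. A smaller block would not satisfy the minimum under these assumptions.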

FARM LOCATION:

- Tier 3 or 4 datacenter certified to ISO 27001

- Geographic decentralization: no more than one full datacenter rack per region (region size TBD) unless and until utilization in that rack is > 50%. This requires more definition. Densely populated areas require more community cloud capacity than sparsely populated areas. Farmers can propose to create more capacity in a single (densely populated) area and still be Gold certified; the DAO will decide. We need to finalize this skeleton process over the coming weeks.
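The decentralization rule can be read as a simple check. The Python sketch below is one interpretation only: it assumes utilization is a single fraction per rack, that a region with several racks needs every existing rack above 50% before another is added, and it does not model region size (TBD) or DAO-approved exceptions. The `Rack` type and `may_add_rack` function are hypothetical.

```python
from dataclasses import dataclass

UTILIZATION_THRESHOLD = 0.5  # "> 50%" from the requirement


@dataclass
class Rack:
    """Hypothetical rack record; the thread does not define how utilization is measured."""
    name: str
    utilization: float  # fraction of capacity in use, 0.0 to 1.0


def may_add_rack(existing_racks_in_region: list[Rack]) -> bool:
    """A new full rack is allowed if the region has none yet (all() of an empty
    list is True) or every existing rack is strictly more than 50% utilized."""
    return all(r.utilization > UTILIZATION_THRESHOLD for r in existing_racks_in_region)


print(may_add_rack([]))                      # first rack in a region
print(may_add_rack([Rack("rack-1", 0.35)]))  # existing rack not yet above 50%
print(may_add_rack([Rack("rack-1", 0.62)]))  # existing rack above 50%
```

The strict `>` follows the wording “> 50%”, so a rack at exactly 50% does not yet open the way for a second one.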

Farming reward simulator.

See HERE

Gold certified farming has been added to this calculator (50% more farming reward and a better SU calculation price). Please note that each certified farm will have to be approved by the TFGRID DAO.

TODO

link to GEP to approve specs of new TFGRID DAO improvements

Great progress! It seems we are getting closer to passing the program. Thanks @weynandkuijpers!

Overall that looks very good to me… I’d just like to comment on two things.

Here I would suggest saying that “…one or more specific farm(s) per location…” should be created. Multiple farms in one DC rack would let farmers join together without having to share the same farm, so rewards do not need to be split between co-farming partners. Since the specs will be defined against 3nodes, it should be irrelevant whether they are in one farm or in multiple farms. But of course, 3node types should not be mixed, whether in a single farm or across multiple farms running in one DC location.

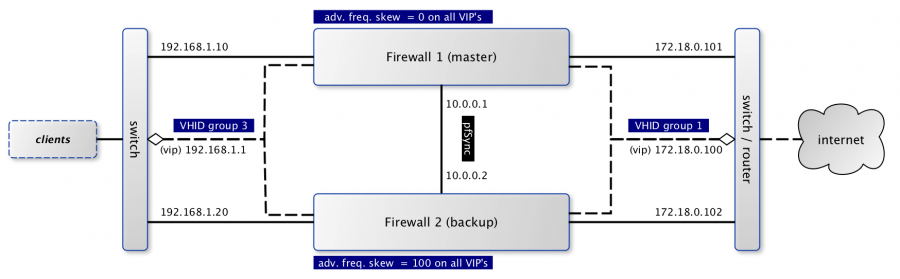

I’ve been tripping over this since the topic was raised. I totally agree that there should be as much redundancy as possible. But I’m unhappy with the definition of simply “two routers per rack”, and I’m not sure what it refers to in detail. I doubt that just having two routers would make the setup significantly more reliable. Let me try to explain my thoughts on this. Tier 3 and higher DCs (which the spec also requires to be ISO 27001 certified) are expected to provide fail-safe internet connections and redundant networking infrastructure in general, as well as redundancy/resiliency for every other technical aspect such as power supply, cooling, etc. For example, in our case the DC has two separate feeds into the building, connected to different backbones. The fail-safe networking infrastructure exists on multiple levels before traffic even reaches the rack. With this in mind, a second router would not add reliability beyond failover in case the router hardware itself fails. If this is the only reason for a second router, I’m fine with that.

Also, I think it would be much more efficient to take precautions that reduce downtime in case of router hardware failure than to provide a high-availability router cluster (if that’s what “two routers per rack” refers to). But even if we do so, when you think it through, the setup is still left with single points of failure: the switch(es). To overcome this, it would be necessary to also provide two switches (for the LAN and for the physical public IP network each) and use link aggregation on the 3nodes to bundle NIC ports and connect them to separate switches. This would require corresponding network configuration on the 3nodes, and I doubt that this is already implemented in z-os. But that’s another story.

As far as I know, the common way to set up a high-availability router/firewall cluster with automatic and seamless failover is CARP (Common Address Redundancy Protocol). If each router has its own uplink from a different ISP, bandwidth is used very inefficiently: since CARP makes one router the master and the other the backup, the two separate uplinks cannot be used together (unlike, for example, a load-balanced dual-WAN setup). Apart from that, not every DC provides cross-connect services for third-party ISP uplinks. So this would only be bandwidth-efficient if both routers use the same WAN-side uplink (maybe with a second gateway), but that would leave the WAN-side switch/router/gateway as a single point of failure too.

So… I’d love to know how a two-router setup should be configured to increase availability/resiliency. I think it’s also important that the router hardware itself is reliable. As router hardware we use a Dell R620 with redundant power supply units, dual CPUs and a RAID controller with a RAID-1 LUN on enterprise SSDs, running OPNsense (I know this is absolutely oversized). We have an identical server ready (installed and patched) that we can use as a backup. For now we would have to switch over manually, but that can be done in minutes. If the gold certified farming specs require it, we could set them both up as a high-availability cluster.

As I’m not an expert, I may be overcomplicating this. It would be great if we could define the requirement of two routers or two ISPs in more detail. What do you think?

Dany beat me to most of the comments. Two routers, each on a different ISP, makes little sense. This is the way to do it: https://docs.netgate.com/pfsense/en/latest/recipes/high-availability-multi-wan.html

Two WANs on an HA router pair can be set up as failover or load-balanced. It’s up to TF to tell us which configuration should be used; there are pros and cons to each.

In addition to my previous post, I would like to raise the question of whether “At least Two Internet Service Provider connections” and “Two routers per rack (one dedicated per upstream connection to the internet)” need to be part of the requirements at all. As long as an uptime of 99.8% is required, it’s the farmer’s responsibility how to get there, and they will take the necessary precautions and will probably build redundant networking structures, especially when there is a risk of losing the gold certified status for missing the required uptime several months in a row. After all, 99.8% allows less than 1.5 hours of downtime per month. That’s not easy to accomplish at all. And there is more to take into account than just the routers. As mentioned in my previous post, I am much more worried about switches as a single point of failure than about our router(s) or internet uplink. And because z-os lacks link aggregation, I don’t see how to get redundancy there.
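To put the 99.8% figure in numbers, here is a small Python helper that computes the monthly downtime budget. It assumes uptime is measured over a calendar month; the thread says uptime is defined “per month per server” but not exactly how the month is bounded. The function name is illustrative.

```python
import calendar

REQUIRED_UPTIME = 0.998  # 99.8% gold certified requirement


def downtime_budget_minutes(year: int, month: int, uptime: float = REQUIRED_UPTIME) -> float:
    """Maximum downtime (in minutes) in a calendar month that still meets `uptime`."""
    days = calendar.monthrange(year, month)[1]  # number of days in that month
    return days * 24 * 60 * (1 - uptime)


for month in (2, 4, 5):  # a 28-, 30- and 31-day month in 2023
    print(calendar.month_name[month], round(downtime_budget_minutes(2023, month), 1))
```

Even in a 31-day month the budget stays just under 90 minutes, so a single slow hardware swap or an unplanned reboot cycle can use up the whole month’s allowance.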

Done and done. Both points are integrated in the summary (added in italic, please check).

Good point(s). The dual router/switch requirement overlaps to a degree with the 99.8% uptime requirement. The “what” is clear: 99.8% uptime achieved. Should we also specify the “how”? I think you might have a point: it’s more a “best practice” statement than anything else. Let me sit on this for a little while.

@weynandkuijpers is there already a GEP to approve specs?

I have two locations (DCs) to approve.

I have the same specs in both locations; only in one am I using a bit more enterprise SSD storage.

I also saw the post about 100% renewable energy. This is definitely the case for one DC; I have to check the other one. Both have certification for that.

Just checked: they are both on 100% renewable energy.

100% renewable energy on a physical level, or just on a guarantee-of-origin level? Because the latter does not really contribute anything of value to our sweet and beautiful planet (greenwashing). I’d rather see ThreeFold go down the route of actually making a difference in this regard (I proposed the GEP). But in the end, the community will have to decide how they would like this to play out.

It says 100% green energy, so I suppose it comes from wind turbines, solar, etc. I can of course ask, but regarding these extra rewards: is there already a GEP to certify it?

You came up with the DAO proposal, right? Since most people agreed (71%), what’s the next step?

That’s what almost everybody assumes when it says 100% green energy. Greenwashing exists for a reason, and it serves its intended purpose in many cases. I doubt that their energy is completely green on a physical level (unless they happen to be powered by hydro, e.g. in northern Europe or similar). Anyway, you should definitely ask them!

@weynandkuijpers has told me he will rewrite the detailed rules (I assume similar to his post in this thread) and create a feature request. From there the process takes its natural course (I assume).

@weynandkuijpers any update on the qualifying process for Gold Certified farming?

I think we can do it the same way as for the validators, right? Like this?

The DC is of course not free, and with all the requirements it takes some commitment from the farmer’s side.

Who can i contact to maybe speed this up a little?

If I’m not mistaken, it’s just a matter of opening a vote, right?

My units are already certified, which may make it a bit easier.

After receiving rewards for last month, I checked the uptime of our nodes and realized that there is some variation in uptime that I can’t explain. The variations are very small, but they would become highly relevant for gold certified farming and its 99.8% uptime requirement.

Last month I was experimenting with public IP configuration on 2 of our 38 nodes and had to reboot those nodes a couple of times. I wanted to check how reboots affect uptime, and found that those nodes had an uptime of 99.98%, while others that were not touched in any way have lower uptimes (!!). Some of them are below 99.8%, although I can guarantee that they were up and running the whole time without any interruption. All nodes share the same rack, the same internet connection and the same power source. I checked the log files on those servers (although I monitor them all the time anyway), and I can assure you 100% that there has not been a second of downtime.

As I said… the variations are very small and occur in the first and second decimal places (99.x%). But on a couple of nodes the uptime is below the 99.8% requirement (the lowest is 99.5%). I have no explanation, and I’m a bit worried with regard to gold certified farming.
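To see why such small-looking variations matter, it helps to convert each reported uptime into the downtime it implies. The Python sketch below assumes a 30-day reporting month (the actual reporting window is not stated in the thread); the node names are hypothetical, and the percentages are the ones quoted in the post.

```python
REQUIRED_UPTIME_PCT = 99.8
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # assumption: 30-day reporting month


def implied_downtime_minutes(uptime_pct: float) -> float:
    """Downtime that a reported uptime percentage corresponds to over a 30-day month."""
    return (100.0 - uptime_pct) / 100.0 * MINUTES_PER_30_DAY_MONTH


# Figures from the post; node names are hypothetical.
reported = {"rebooted-node": 99.98, "untouched-node": 99.5}

for node, pct in reported.items():
    status = "meets" if pct >= REQUIRED_UPTIME_PCT else "below"
    print(f"{node}: {pct}% = {implied_downtime_minutes(pct):.1f} min implied downtime "
          f"({status} {REQUIRED_UPTIME_PCT}%)")
```

Under this assumption, 99.98% corresponds to under 9 minutes, while 99.5% corresponds to 216 minutes (3.6 hours), about two and a half times the 99.8% budget, for a node that according to its own logs never went down.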

Are there other farmers who experience this kind of variation in uptime without any reasonable cause?

Hey Dany, are you using an uptime monitor?

I’m almost positive the uptime reporting has an issue: it reports low.

Your units are already certified? Those wouldn’t be eligible for gold certification, since they would be Titans.

…or they would be Pekings!